Frontiers in Theoretical Physics

The epic quest to comprehend the ultimate nature of matter and force has entered a new era of uncertainty – and excitement – unparalleled in the past 100 years.

At the dawn of the 20th century, the hard-won self-assurance of classical science was shattered by a series of bewildering revelations about the atom that were inexplicable by contemporary ideas. Simultaneously, the geometry of space and the duration of time itself were suddenly revealed to be eerily inconstant, barely resembling the comfortable notions that had served scientists for centuries.

Fortunately, theoretical physics thrives on enigma, and it eventually overcame those problems through two intellectual upheavals as profound as any in the history of human thought: the quantum revolution and the theory of relativity.

Both have performed supremely well, giving civilization unprecedented insight into – and unparalleled command of – matter and energy. But they are no longer adequate to explain fully what we know of reality.

Physics now requires a new set of categories to describe the contents of the cosmos. Science and technology alike are waiting for the mystifying world of quantum phenomena to be controlled and put to use. And long-term progress demands that the two hugely successful but basically incompatible theories of general relativity and quantum mechanics be reconciled and unified on a dimensional scale that extends from a trillionth of a trillionth the size of an atom to the outermost extent of the universe.

Those challenges are every bit as daunting as the ones physics faced 100 years ago. But today’s theorists are already making headway on several fronts.

Beyond the Standard Model

For decades, physicists have been expanding and refining a magnificent blueprint, known as the Standard Model, which relates the characteristics of 12 known fundamental matter particles (six heavy and six light, termed “fermions” in the aggregate) to three elementary forces (strong, weak and electromagnetic) that affect them, and to five force-carrying particles called “bosons.”

It is the most accurate theory ever devised; many of its predictions are confirmed to better than one part in a billion. It has made much of modern life possible, notably including the transistor, the laser and the light-emitting diode, to name only a few. It is one of mankind’s paramount achievements. And it is conspicuously deficient.

At the largest spatial scales, the Standard Model’s present inventory of entities cannot account for more than 20 percent of the mass contained in galaxies. The remaining four-fifths – invisible to every existing detection device, but clearly present from its effects on galactic shape and rotation – is in the form of some mysterious stuff called “dark matter” for want of a better term. Moreover, the model’s fundamental forces cannot explain the new-found “dark energy” that is accelerating cosmic expansion and that apparently makes up fully three-fourths of the total mass-energy content of the universe.

At the smallest scales, the Standard Model does not provide a theoretical rationale for the peculiar values and range of elementary particle masses, which differ by a factor of as much as 100 billion – even though their sizes (if, in fact, they have any dimensions at all) are virtually identical down to the current limits of observation at 10⁻¹⁸ meter.

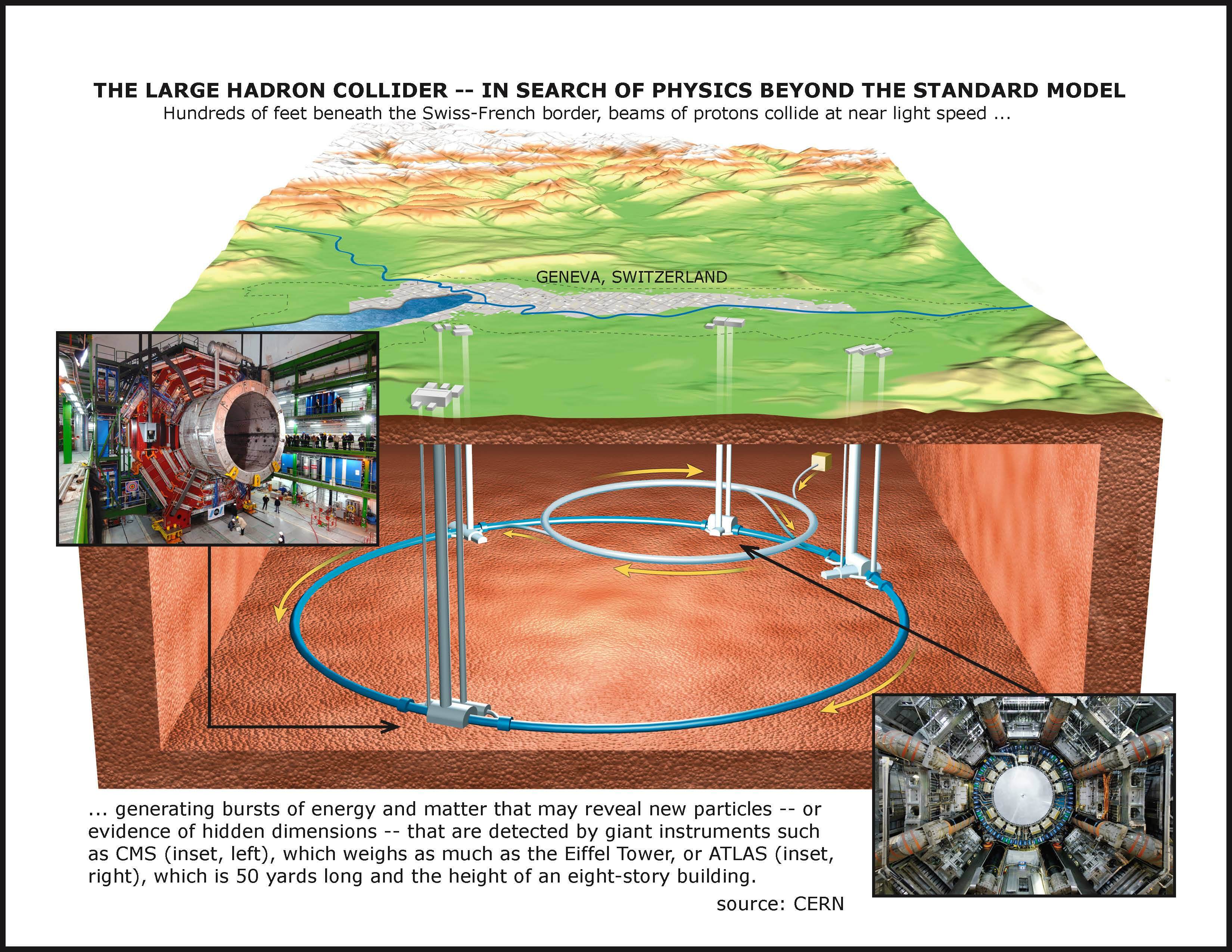

Theorists now generally agree that the property of mass is conferred upon particles when they interact with an all-pervasive but so far undetected field named for British physicist Peter Higgs, who postulated it. One of the principal goals of the Large Hadron Collider (LHC) in Switzerland, the world’s most powerful accelerator, is to detect the Higgs field’s hypothesized carrier particle, the Higgs boson.

But the Higgs field is something of a late addition to the Standard Model, like an outrigger attached to stabilize a canoe, and it does not provide a complete account of the perplexing particle masses. There is, however, a class of theoretical modifications to the Standard Model that can make the mass problem more tractable and that removes several other difficulties as well.

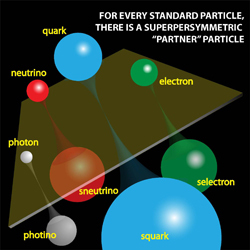

Such theories, collectively called supersymmetry, directly attack a central, stubborn question, namely, why should there be completely different sets of rules for fermions (matter particles) and bosons (force-carrying particles)?

Nature seems suffused with the principle of symmetry. That is, the basic aspects of physical phenomena are unchanged if, for example, every particle (such as an electron) involved is interchanged with its counterpart (a positron), or if the entire ensemble of objects is rotated, and so forth. (There is a vital exception: Certain symmetry violations, acting shortly after the Big Bang, appear to be responsible for the fact that we live in a universe of matter and not simply radiation.)

So at the deepest level, it is reasonable to assume that each fermion has a heretofore unseen bosonic superpartner, and each boson has a corresponding fermion with which it is interchangeable at some energy scale. Superparticles would not have been observed yet, theorists argue, because they are so massive that they could not have been generated in particle accelerators prior to the LHC.

Supersymmetry has several advantages over the unadorned Standard Model, including a plausible candidate for “dark matter.” Superpartner particles would rapidly break down into successively less massive forms until they reached the lightest supersymmetric particle (LSP). Those would have nothing to decay into, so they would be stable. LSPs would exist throughout the universe, serving an invisible but essential role as dark matter in galaxies and clusters of galaxies.

“For over two centuries, people have wanted to unify our understanding of the forces of nature, to have one basic force rather than several,” says theorist Gordon Kane of the University of Michigan. “Supersymmetry allows us to do that. In the Standard Model, we cannot understand how the universe could begin in a burst of energy released in the form of particles, with equal numbers of particles and antiparticles, and quickly evolve into our universe made of matter but not antimatter. Supersymmetric theories are rich enough to provide explanations of this.”

Supersymmetry is also thoroughly consistent with another ongoing theoretical effort to explicate the Standard Model – the herculean task of understanding how quarks, the fundamental matter components of protons and neutrons, interact with the strong force carriers (gluons) that bind them together, and how gluons interact with one another. This theory, called quantum chromodynamics, explains why a single quark is never observed: under any but the most extreme conditions, quarks are always tightly bound into pairs or triplets. Recent experimental results, however, suggest that in a stupendously high-energy collider environment individual quarks and gluons can exist unconfined in a seething plasma. Definitive evidence of such a fluid would be a powerful confirmation of Standard Model predictions.

The Second Quantum Revolution

Meanwhile, quantum mechanics is poised to undergo a transformation – moving from characterization of quantum states to their control and utilization, with potentially explosive repercussions for science and technology. If the weird, counterintuitive behavior of quantum objects can be harnessed for computation, it will not simply enable much faster calculations and data processing. It could radically change the very nature of computing.

For example, theory has pointed to a means of radically changing the way information is stored and processed. In ordinary electronics, data take the form of electric charges, the movement of which is controlled by transistors. But in addition to charge, electrons also have a peculiar quantum property called “spin,” a sort of angular momentum arising from the fact that electrons behave as if they were tiny magnets rotating around an axis. Like the on-or-off, 0-or-1 binary nature of electronic bits, spin is two-valued, either “up” or “down.” But compared to charge-based systems, spin can be switched between states incredibly fast with a minuscule amount of energy. So information stored in spin states, and processed by “spintronics,” offers the prospect of much higher speeds at much lower power than even the best of today’s computers.

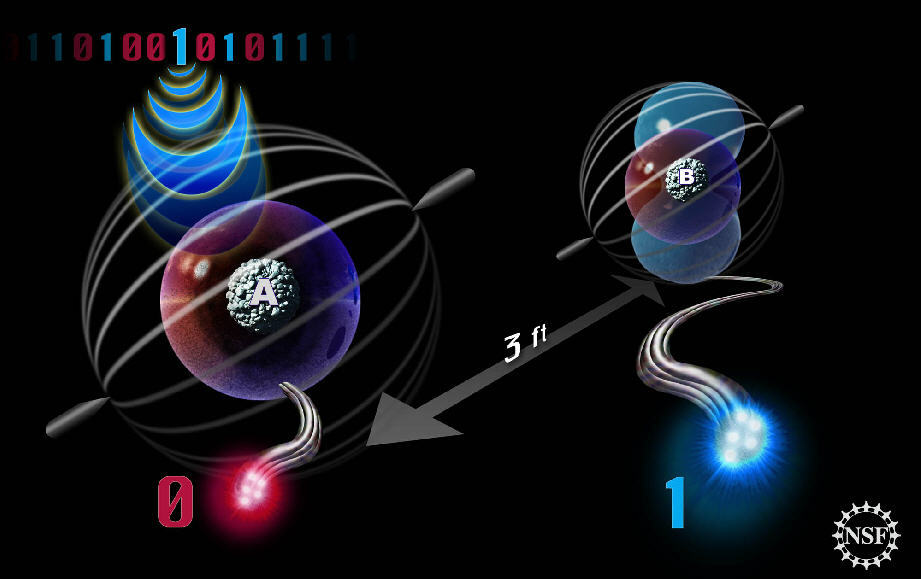

That improvement, however, pales in comparison to the possibilities of a genuinely quantum computer that exploits a phenomenon called “superposition”: the quality of being in multiple states at the same time. That is not possible in the classical world we inhabit. All objects have definite characteristics, specific locations and particular properties. Things are red or blue, here or there, hot or cold. So conventional computers represent information in the form of strings of binary digits, or “bits,” each of which is either 0 or 1, on or off, high or low voltage.

But in the quantum realm, at the atomic scale and below, objects exist in a superposition of multiple conditions simultaneously. So a quantum bit (which physicists call a “qubit”) can be 0 and 1 at the same time. Only when a measurement is taken is an object abruptly forced to take on specific properties. It is somewhat analogous to flipping a coin. As it spins in the air, the coin is neither heads nor tails. It won’t be one or the other until it lands.
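The coin analogy can be made concrete with a toy simulation. The sketch below is an illustration only, not how a real quantum computer is programmed. It assumes a qubit described by two amplitudes and applies the standard Born rule, under which the chance of reading 0 is the squared magnitude of the first amplitude. The function name `measure_qubit` and its parameters are hypothetical, chosen for this example.

```python
import math
import random


def measure_qubit(amp0, amp1, rng):
    """Simulate one measurement of a qubit with amplitudes (amp0, amp1).

    Born rule: the outcome is 0 with probability |amp0|^2, otherwise 1.
    The amplitudes must be normalized so the two probabilities sum to 1.
    """
    p0 = abs(amp0) ** 2
    p1 = abs(amp1) ** 2
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must satisfy |amp0|^2 + |amp1|^2 = 1")
    return 0 if rng.random() < p0 else 1


# Equal superposition of 0 and 1: the "coin in the air".
amp = 1 / math.sqrt(2)
rng = random.Random(0)  # fixed seed so the run is repeatable
outcomes = [measure_qubit(amp, amp, rng) for _ in range(10_000)]
fraction_of_ones = sum(outcomes) / len(outcomes)
print(f"fraction of 1s: {fraction_of_ones:.3f}")
```

Each individual call returns a definite 0 or 1, and only the long-run fraction (close to one half here) reflects the underlying superposition.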

In the quantum world, however, it is also possible to arrange for two objects to be “entangled” – to have their superpositions inextricably linked in such a way that the relationship between their properties is certain even though the properties themselves are unknowable until they are measured. So if two coins could somehow be entangled, Person A could flip one coin on Earth and Person B could flip the other coin simultaneously on Mars. If the Earth coin came up heads, then the Mars coin would always necessarily come up tails, and vice versa.
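The Earth and Mars coins can be mimicked with a small sampling sketch. It assumes the pair is prepared in the anti-correlated state (|01> + |10>)/√2 and draws joint outcomes with probability equal to the squared amplitude. The helper `measure_pair` and the dictionary layout are hypothetical, introduced only for this illustration.

```python
import math
import random


def measure_pair(state, rng):
    """Sample one joint measurement of two qubits.

    `state` maps each two-bit outcome, e.g. "01", to its amplitude.
    Each outcome is drawn with probability |amplitude|^2 (Born rule).
    """
    outcomes = list(state)
    weights = [abs(state[o]) ** 2 for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=1)[0]


# Anti-correlated entangled state: (|01> + |10>) / sqrt(2).
# The first character is the "Earth" result, the second the "Mars" result.
amp = 1 / math.sqrt(2)
entangled = {"01": amp, "10": amp}

rng = random.Random(1)  # fixed seed so the run is repeatable
results = [measure_pair(entangled, rng) for _ in range(1_000)]
always_opposite = all(earth != mars for earth, mars in results)
print("results always opposite:", always_opposite)
```

This reproduces only the measurement statistics in a single basis. A pair of classical coins pre-arranged to land on opposite faces would give the same table. What sets entanglement apart shows up when the two parties measure along different axes, which this sketch does not model.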

Theorists have shown that superposition and entanglement together can be harnessed to create a sort of ultra-parallel processing in which huge numbers of alternative solutions can be examined simultaneously. Certain kinds of tasks would be made dramatically faster: factoring the large numbers used in data encryption would be exponentially faster than the best known classical methods, and searches through titanic databases would need only about the square root of the number of steps.
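To give a feel for the scale of the search speed-up, the sketch below compares rough step counts for finding one marked entry among N unsorted items. It assumes the textbook figures: about (N + 1)/2 lookups on average for a classical scan in random order, and about (π/4)·√N iterations for Grover's quantum search algorithm, which the article does not name. The function names are hypothetical, and the numbers count idealized steps, not running time on real hardware.

```python
import math


def classical_expected_lookups(n_items):
    """Average lookups to find one marked item by checking entries in random order."""
    return (n_items + 1) / 2


def grover_iterations(n_items):
    """Approximate Grover iterations for one marked item: floor(pi/4 * sqrt(N))."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


for n in (10**6, 10**9, 10**12):
    print(n, classical_expected_lookups(n), grover_iterations(n))
```

For a million entries this works out to roughly half a million classical lookups against 785 quantum iterations. The gap widens as the database grows, but it is a square-root improvement, not an exponential one.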

But that may only be the beginning, because the nature of scientific research has changed in response to the burgeoning capability of computers. Many “experiments” are now conducted entirely in silico – that is, by means of highly sophisticated computer simulations and models that are employed in tasks from weather prediction to cosmology to the search for new pharmaceuticals, genetic interactions and cell biology.

“Computation increasingly defines the limit of what we know in science,” says theorist Michael Freedman of Microsoft’s Station Q at the University of California, Santa Barbara. “At some time this century, we expect to pass from classical to quantum,” he says, and “in a sense that’s the ultimate transition. We’ll be able to compute everything that can be computed.”

However, many obstacles lie in the way. Perhaps the most ominous is the fact that superposition is an extremely delicate condition, subject to collapse (called “decoherence”) at the most fleeting contact with the surrounding environment.

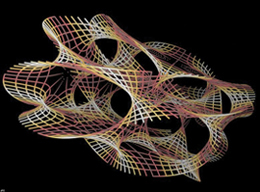

So theorists are devising methods to minimize or eliminate decoherence effects. One of the most ingenious entails encoding information in shapes – such as the braided patterns made in space and time by multiple interconnected quasiparticles called anyons – rather than in the quantum state of a single trapped atom or cluster of atoms. This notion, known as topological quantum computing, takes advantage of the fact that shapes are robust; they retain their distinguishing character despite local perturbations.

To a topologist, a cube, a pyramid and a sphere all belong to the same family of shapes because they can be smoothly transformed into one another without altering anything truly fundamental. Similarly, a donut and a coffee cup are identical shapes, but both belong to a completely different family from pyramids and cubes because every cup and donut has one hole (empty space) in its structure. Storing information in shapes thus allows a certain amount of accidental error (that is, localized deformity) without losing meaning. So if a cube gets banged up a little and comes to look more like a sphere, it won’t change its essential nature. That is how topological quantum computing may protect against errors.
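One way to see what "same family of shapes" means is to compute a quantity that smooth deformation cannot change. The sketch below uses the Euler characteristic, V − E + F (vertices minus edges plus faces), a standard topological invariant that the article does not mention by name. For a closed surface it equals 2 − 2 × (number of holes). The function names and the small meshes are hypothetical examples, and nothing here models anyons or braiding.

```python
def euler_characteristic(faces):
    """Return V - E + F for a closed mesh given as tuples of vertex indices."""
    vertices = {v for face in faces for v in face}
    edges = set()
    for face in faces:
        for i in range(len(face)):
            # Consecutive vertices around a face share an edge.
            edges.add(frozenset((face[i], face[(i + 1) % len(face)])))
    return len(vertices) - len(edges) + len(faces)


def hole_count(faces):
    """Number of holes (genus) of a closed orientable surface: (2 - chi) / 2."""
    return (2 - euler_characteristic(faces)) // 2


def torus_mesh(n, m):
    """Quad mesh on an n-by-m grid that wraps around in both directions (n, m >= 3)."""
    def idx(i, j):
        return (i % n) * m + (j % m)
    return [
        (idx(i, j), idx(i + 1, j), idx(i + 1, j + 1), idx(i, j + 1))
        for i in range(n)
        for j in range(m)
    ]


CUBE = [(0, 1, 2, 3), (4, 5, 6, 7), (0, 1, 5, 4),
        (1, 2, 6, 5), (2, 3, 7, 6), (3, 0, 4, 7)]
PYRAMID = [(0, 1, 2, 3), (0, 1, 4), (1, 2, 4), (2, 3, 4), (3, 0, 4)]
DONUT = torus_mesh(3, 3)

for name, mesh in (("cube", CUBE), ("pyramid", PYRAMID), ("donut", DONUT)):
    print(name, euler_characteristic(mesh), hole_count(mesh))
```

The cube and the pyramid both give a characteristic of 2 and zero holes, while the donut-shaped mesh gives 0 and one hole. Denting or stretching either mesh leaves those counts untouched, which is the kind of robustness the passage describes.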

“The idea is to find physical systems where the laws of physics prevent quantum decoherence and quantum error correction would be unnecessary,” says theorist and topological computing pioneer Sankar Das Sarma of the University of Maryland. “It is somewhat akin to fighting noise in life by either obtaining noise-correcting headphones (quantum error correction) or by going deaf (topological quantum computation). It turns out that certain quantum states have hidden geometrical properties which are robust in the sense the hole in a donut is robust – a little bite does not destroy the hole!”

Strings as Ultimate Reality

The dimensions at which such effects occur may seem inconceivably tiny. But another group of theorists is obliged to focus on a scale 20 orders of magnitude smaller. They are embarked on a project as ambitious as any in the annals of physics: Reconciling the apparently incompatible theories of general relativity and quantum mechanics by subsuming both into an underlying reality that could unite all of physics into a “Theory of Everything.”

It has to be done. The theory of general relativity accurately describes the mighty curvature of space-time in the presence of mass. But it does not deal with local interactions among matter, forces and fields and provides no description of how gravity might be quantized, as is every other fundamental property. Conversely, quantum mechanics makes no provision for the effects of general relativity, and does not have a satisfactory theory of the graviton – the presumed force-bearing particle of gravitation.

“A grand challenge to theoretical physics,” says David Gross, Director of the Kavli Institute for Theoretical Physics, “is to reconcile the two pillars of modern physics – Einstein’s theory of general relativity that explains gravity and controls the large-scale structure of the universe, and quantum mechanics that operates at the atomic and nuclear scale and provides the framework for the understanding of elementary particles and the forces that act on them.”

Attempts to unify the two theories typically require a mind-wrenching plunge to spatial sizes around a billionth of a trillionth the size of a proton. That “Planck length,” theorists believe, is the minimum significant dimension in nature just as a single grain of sand is the smallest significant unit in the structure of a dune.

The Planck length (10⁻³⁵ m) is the scale at which gravity is presumed to become quantized – and, indeed, at which many kinds of differences that are apparent at larger dimensions may simply vanish. Entities as drastically dissimilar as a quark, an electron and a photon may be exposed as mere vibrational variations on a single, utterly fundamental substrate: a “superstring.” Much as an individual violin string can generate a wide range of notes depending on its mode of oscillation, a superstring vibrating one way may appear as an electron, and vibrating another way appears as a quark.
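For reference, the Planck length quoted above is conventionally built from three constants of nature. The expression below is the standard textbook definition, not something stated in the article. The symbols are the usual ones: ħ is the reduced Planck constant, G is Newton's gravitational constant and c is the speed of light.

```latex
\ell_P = \sqrt{\frac{\hbar G}{c^{3}}} \approx 1.6 \times 10^{-35}\ \text{m}
```

Because it combines the constant that governs quantum effects (ħ) with the one that governs gravity (G), this length is a natural guess for the scale at which the two theories must meet.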

This is not simply arcane conjecture. Nature is notoriously parsimonious. And, as in the case of supersymmetry, theorists reasonably assume, based on centuries of accumulated evidence, that the teeming diversity of observed reality results from only a very few truly fundamental processes. So it is logical to suspect that what we perceive as differences mask a deep underlying unity, or symmetry, in nature. At some size scale or energy level, it seems likely that all matter is essentially made of the same stuff, and that all forces are manifestations of a single “superforce.”

“String theory has been remarkably successful in achieving a reconciliation between general relativity and quantum mechanics,” Gross says. “But string theory, still in its infancy, offers much more; a true unification of all forces and particles as arising as different modes of vibration of a single string. Yet many problems remain and new ideas and concepts will be necessary. Therefore we await with great anticipation the start-up of the LHC which should produce exciting new discoveries and important new clues.”

Whatever wonders are revealed, they will tax the ingenuity of theorists. But if more than two millennia of steady progress is any guide, theory will be equal to the challenge.